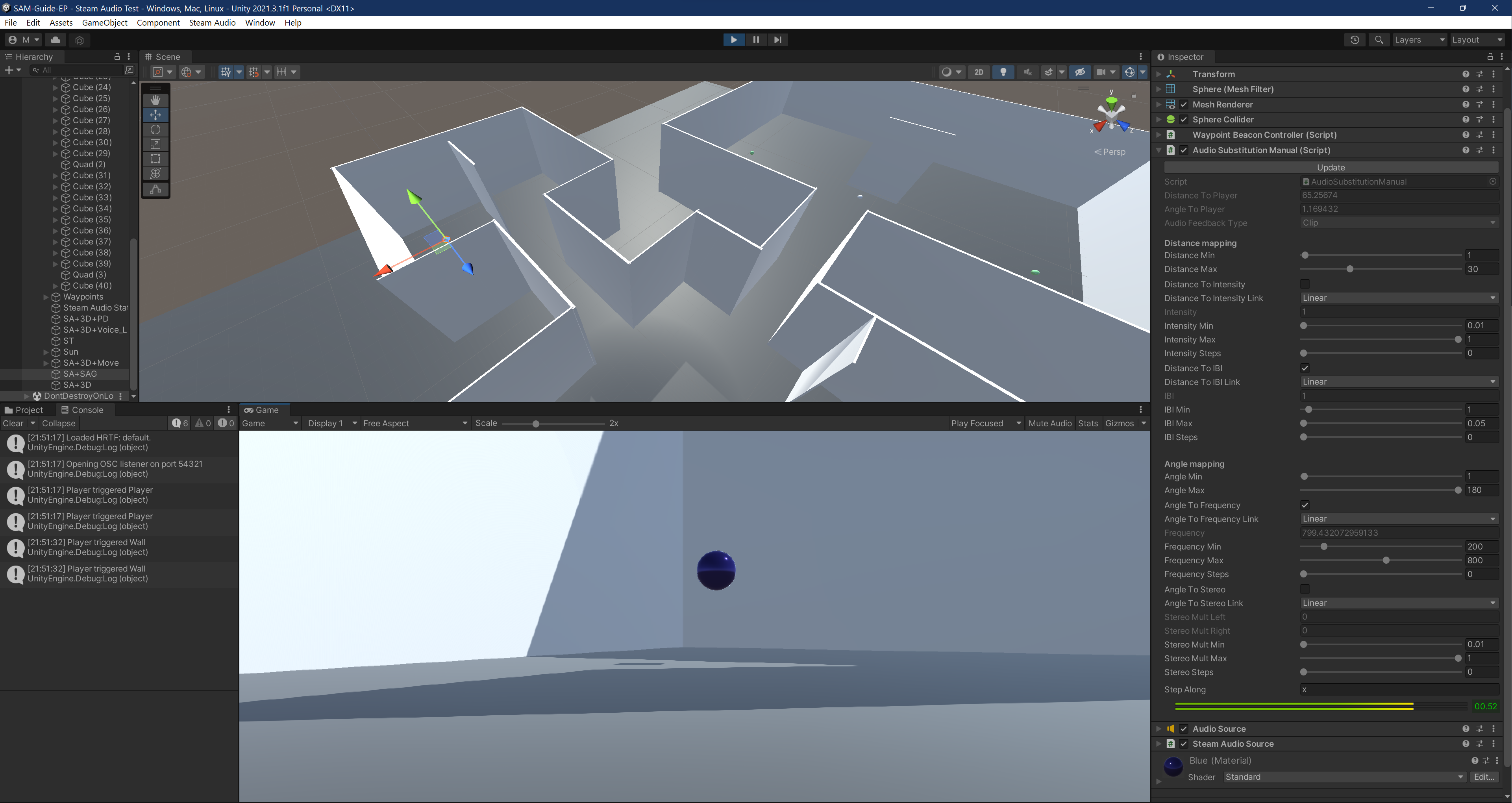

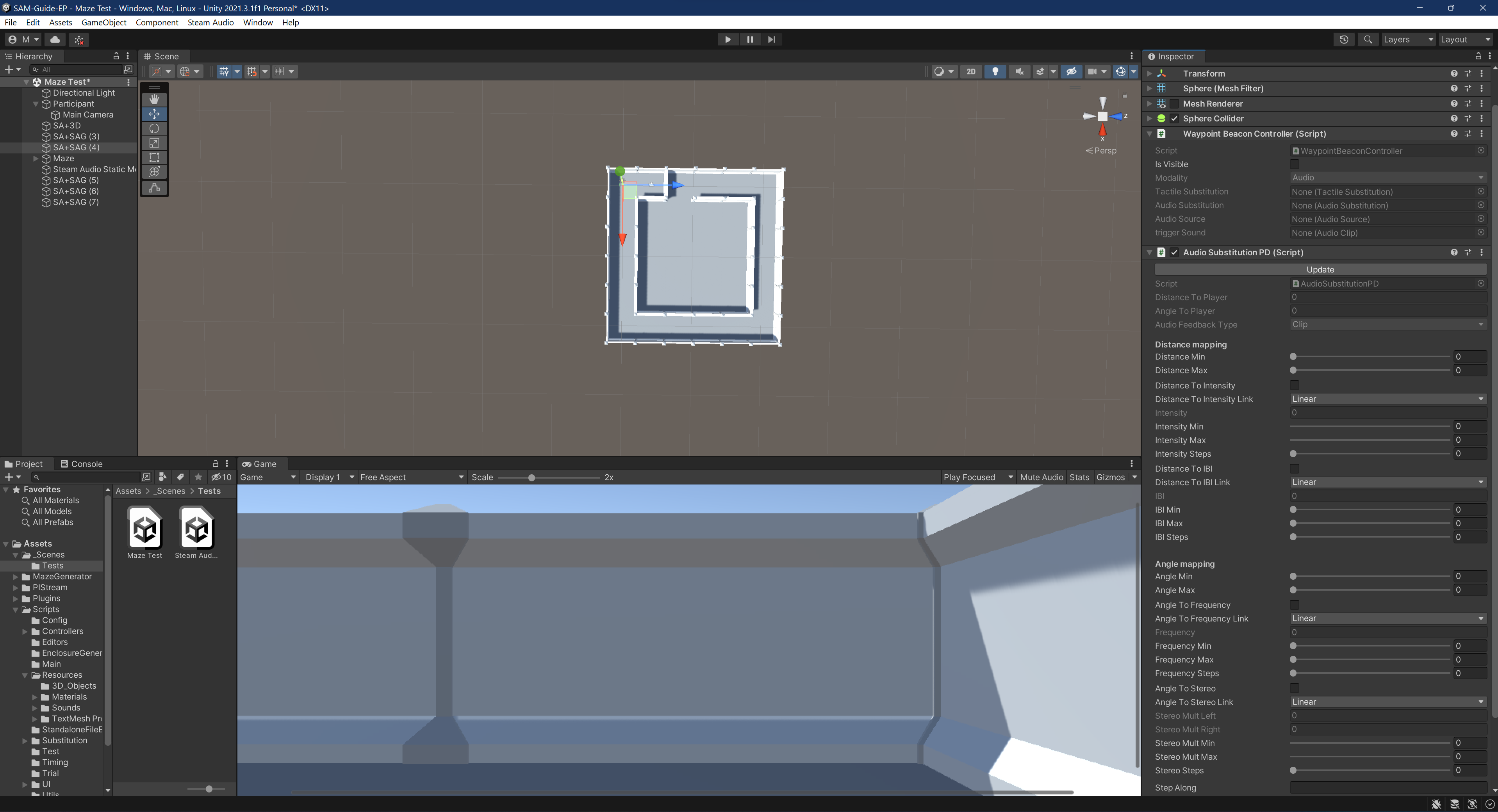

The platform is built on Unity. It connects to the motion tracking devices used by the consortium (Polhemus, VICON, Pozyx), uses PureData for sound-wave generation and Steam Audio for 3D audio modeling, and communicates wirelessly with the consortium’s non-visual interfaces.

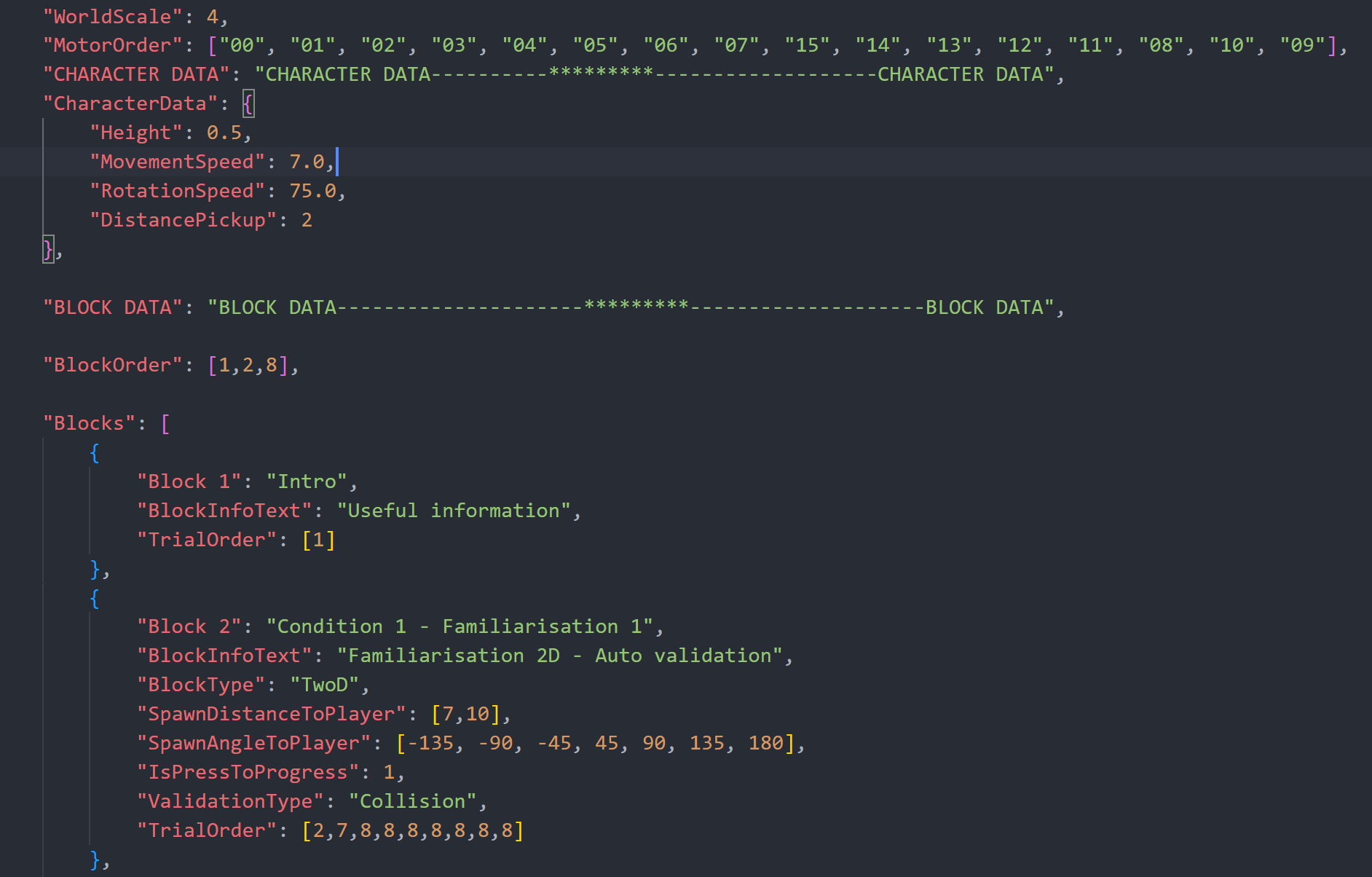

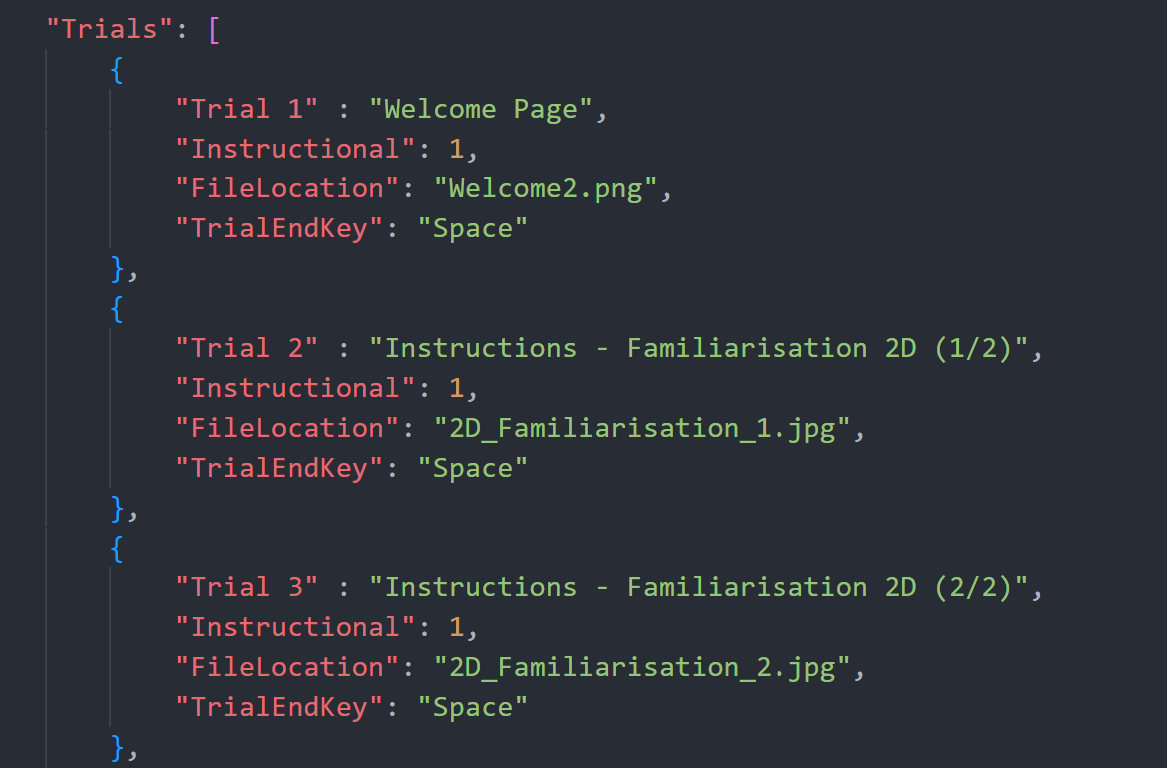

This platform makes it easy to set up experimental trials by specifying their desired characteristics in a JSON file (based on the OpenMaze project). Unity then automatically generates each trial’s environment from those specifications, populates it with the relevant items (e.g., a beacon emitting a tactile signal that marks a target to reach in a maze), handles the transitions between successive trials and blocks of trials, and logs all relevant user metrics to a data file.
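
To make the configuration-driven workflow concrete, here is a minimal sketch in Python (not the platform's Unity code) of how a trial specification might be read, walked block by block, and logged. Every field name (`blocks`, `trials`, `maze`, `beacon`) and both helper functions are hypothetical and chosen for illustration only; they do not reproduce the actual OpenMaze or platform schema.

```python
import csv
import io
import json

# Hypothetical configuration: these field names are illustrative only and
# are NOT the real OpenMaze or platform schema.
CONFIG_JSON = """
{
  "blocks": [
    {"name": "practice", "trials": [
      {"maze": "corridor",
       "beacon": {"type": "tactile", "position": [2.0, 0.0, 5.0]}}
    ]},
    {"name": "main", "trials": [
      {"maze": "t_junction",
       "beacon": {"type": "tactile", "position": [-3.0, 0.0, 4.0]}},
      {"maze": "t_junction",
       "beacon": {"type": "audio", "position": [3.0, 0.0, 4.0]}}
    ]}
  ]
}
"""


def iter_trials(config):
    """Yield (block_name, trial_index, trial) in the order trials would run."""
    for block in config["blocks"]:
        for index, trial in enumerate(block["trials"]):
            yield block["name"], index, trial


def run_session(config, log_file):
    """Walk every trial and write one log row per trial; return the trial count."""
    writer = csv.writer(log_file)
    writer.writerow(["block", "trial", "maze", "beacon_type"])
    count = 0
    for block_name, index, trial in iter_trials(config):
        # A real platform would build the scene and record user metrics here;
        # this sketch only logs what was specified for the trial.
        writer.writerow([block_name, index, trial["maze"], trial["beacon"]["type"]])
        count += 1
    return count


config = json.loads(CONFIG_JSON)
log = io.StringIO()  # stands in for the data file written to disk
n_trials = run_session(config, log)
print(log.getvalue())
```

The point of the design is that adding a trial or changing a beacon only requires editing the JSON; the code that iterates over blocks and writes the log stays the same.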