This website is currently under construction.

1 The Project

1.1 SAM-Guide in a nutshell

SAM-Guide’s high-level objective is to efficiently assist Visually Impaired People (VIP) in tasks that require interacting with space. It aims to develop a multimodal interface to assist VIP during different types of spatial interactions, including object reaching, large-scale navigation (indoor and outdoor), and outdoor sport activities.

SAM-Guide aims to study and model how to optimally supplement vision with both auditory and tactile feedback, re-framing spatial interactions as target-reaching affordances, and symbolizing spatial properties by 3D ego-centered beacons. Candidate encoding schemes will be evaluated through Augmented Reality (AR) serious games relying on motion capture platforms and indoor localisation solutions to track the user’s movements.

1.2 SAM-Guide’s inception

This project was born from the collaboration of four teams spread across three sites, which have been independently studying and developing assistive devices for VIP for many years, each bringing complementary expertise:

The “AdViS” (Adaptive Visual Substitution) multidisciplinary team consists of two specialists in computer science, signal processing, and electronics (S. Huet and D. Pellerin), and one specialist in biology, cognitive neurosciences, and psychophysics (C. Graff).

They have been working together for several years on a modular audio-visual Sensory Substitution Device (SSD) (Guezou-Philippe et al., 2018; Stoll et al., 2015). Their current endeavor revolves around the virtual prototyping of SSDs using motion capture (VICON) and AR, which makes it easy to emulate the testing environment and the SSD components, and to implement various spatial-to-sound transcoding solutions (Giroux et al., 2021).

- The X-audio team from the CMAP laboratory at Ecole Polytechnique.

The X-audio team has developed state-of-the-art numerical algorithms and a complete software suite for the numerical simulation of acoustic scattering, binaural sound, reverberation, and real-time rendering. They currently study the guidance of VIP using 3D sounds during sports (such as running or roller skating), with encouraging results. This system relies on a virtual mobile guiding beacon that moves in front of the user and provides spatialized audio cues about its position (Ferrand et al., 2018, 2020). Those audio cues include a virtual guide (similar to the guiding fairy in The Legend of Zelda) which VIP follow while running in order to stay on track.

They have also developed expertise in sensor network tracking and data fusion for robust real-time positioning, and are currently conducting tests to apply real-time audio guidance to laser-gun aiming, for which they have developed a working prototype.

NU’s team comprises a specialist in biomedical engineering & electronics (E. Pissaloux), and two in cognitive ergonomics & human movement sciences (E. Faugloire, B. Mantel).

Both partners have worked for many years on assistive devices for VIP, and currently focus on supplementing the spatial cognition capabilities of VIP through the development of tactile interfaces for autonomous orientation and navigation (Faugloire & Lejeune, 2014; Rivière et al., 2018), map comprehension (Rivière et al., 2019), and access to art (Pissaloux & Velázquez, 2018).

NU is currently working with a vibrotactile belt that provides ego-centered orientation information on relevant environmental cues (allowing VIP to localize and orient themselves autonomously), and a Force-Feedback Tablet that allows the exploration of 2D maps and images (Gay et al., 2018).

Other foci of their research are the optimization of the tactile encoding of remote cues (Faugloire et al., 2022) using motion capture systems (i.e. Polhemus Fastrak) in AR, ecological modes of response, and the affordance-based design of Human-Machine Interfaces (HMI) for whole-body movements (Mantel et al., 2012; Stoffregen & Mantel, 2015).

1.3 Our philosophy

TODO

1.4 Our objectives

TODO

Design an experimental platform to allow …

Start testing …

2 The Theory

TODO

Interacting with space is a constant challenge for Visually Impaired People (VIP), since spatial information in humans is typically provided by vision. Sensory Substitution Devices (SSD) have been promising Human-Machine Interfaces (HMI) to assist VIP. They re-code missing visual information as stimuli for other sensory channels. Our project departs somewhat from the initial ambition of SSDs, namely a single universal integrated device that would replace the whole sense organ, and moves towards common encoding schemes for multiple applications.

SAM-Guide will search for the most natural way to give online access to the geometric variables that are necessary to achieve a range of tasks without vision. Defining such encoding schemes requires selecting a crucial set of geometric variables, and building efficient and comfortable auditory and/or tactile signals to represent them. We propose to concentrate on action-perception loops representing target-reaching affordances, where spatial properties are defined as ego-centered deviations from selected beacons.
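
To make the idea of an ego-centered deviation from a beacon more concrete, here is a minimal Python sketch. It is only an illustration and not the project's actual transcoding library: the function name `ego_deviation`, the `EgoDeviation` type, and the coordinate conventions (world frame with z pointing up, heading given as a yaw angle measured counter-clockwise from the x axis) are all assumptions made for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class EgoDeviation:
    """Hypothetical container for a beacon's position relative to the user."""
    distance: float   # metres
    azimuth: float    # radians, positive when the beacon is to the user's left
    elevation: float  # radians, positive when the beacon is above the user


def ego_deviation(user_pos, user_yaw, beacon_pos):
    """Express a beacon's world position as an ego-centered deviation.

    Assumed conventions (not taken from the project):
    - positions are (x, y, z) tuples in metres, with z pointing up;
    - user_yaw is the heading in radians, counter-clockwise from the x axis.
    """
    dx = beacon_pos[0] - user_pos[0]
    dy = beacon_pos[1] - user_pos[1]
    dz = beacon_pos[2] - user_pos[2]

    horizontal = math.hypot(dx, dy)
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)

    # Direction of the beacon in the world frame, then relative to the heading.
    bearing = math.atan2(dy, dx)
    # Wrap the difference into [-pi, pi) so that left/right stays unambiguous.
    azimuth = (bearing - user_yaw + math.pi) % (2 * math.pi) - math.pi
    elevation = math.atan2(dz, horizontal)

    return EgoDeviation(distance, azimuth, elevation)


# Usage: a user at the origin facing along +y, with a beacon 1 m along +x.
# The beacon lies to the user's right, so the azimuth is negative.
deviation = ego_deviation((0.0, 0.0, 0.0), math.pi / 2, (1.0, 0.0, 0.0))
print(deviation)
```

Under these assumptions, an encoding scheme would then map `distance`, `azimuth`, and `elevation` to auditory or tactile parameters; that mapping is precisely what the project sets out to study, so it is left out of the sketch.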

The same grammar of cues could help VIP gain autonomy across a range of vital or leisure activities. Among such activities, the consortium has already made progress in orienting and navigating, object locating and reaching, and laser shooting. Based on current neurocognitive models of human action-perception and spatial cognition, the design of the encoding schemes will rest on common theoretical principles: parsimony (minimum yet sufficient information for a task), congruency (leverage existing sensorimotor control laws), and multimodality (redundant or complementary signals across modalities). To ensure an efficient collaboration, all partners will develop and evaluate their transcoding schemes based on common principles, methodology, and tools. An inclusive user-centered “living-lab” approach will ensure that our solutions remain aligned with the needs of VIP.

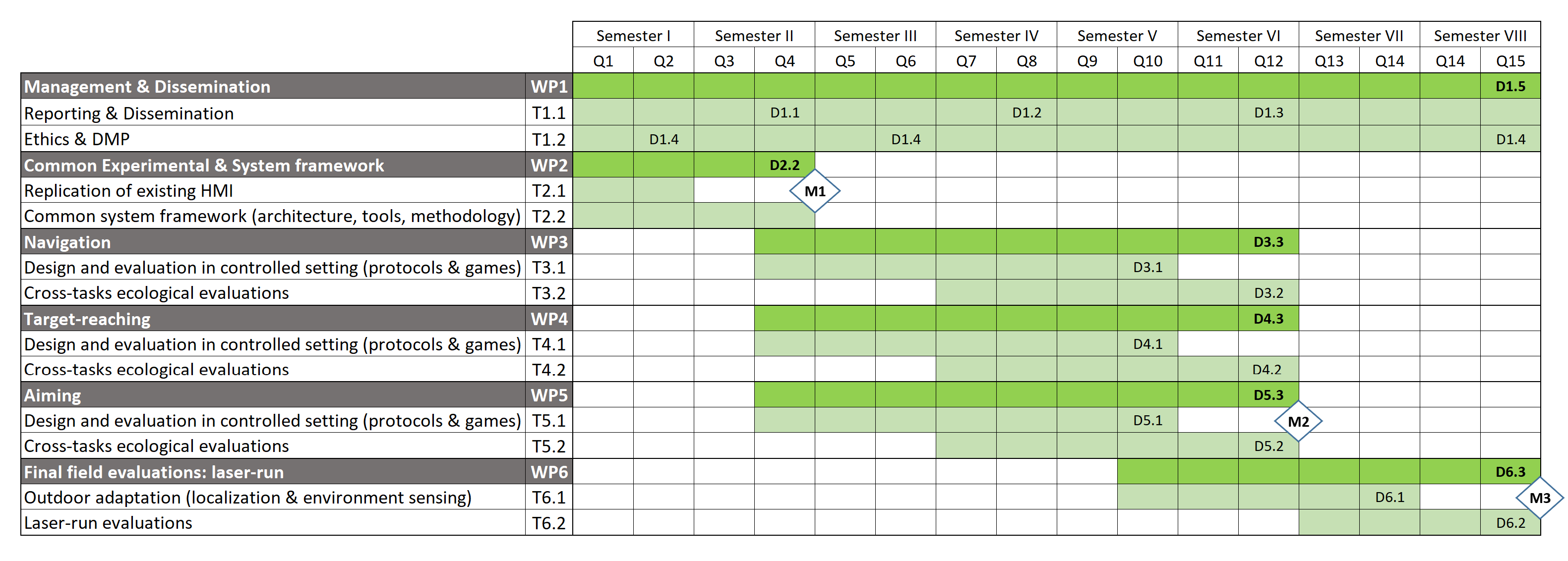

Five labs (three campuses) comprising ergonomists, neuroscientists, engineers, and mathematicians, united by their interest and experience in designing assistive devices for VIP, will duplicate, combine, and share their pre-existing SSD prototypes: a vibrotactile navigation belt, an audio-spatialized virtual guide for jogging, and an object-reaching sonic pointer. Using those prototypes, they will iteratively evaluate and improve their transcoding schemes in a 3-phase approach. First, the schemes will be tested in controlled experimental settings through augmented-reality serious games in motion capture (virtual prototyping facilitates the creation of ad-hoc environments, and gaming eases the participants’ engagement). Next, spatial interaction subtasks will be progressively combined and tested in wider and more ecological indoor and outdoor environments. Finally, SAM-Guide’s system will be fully transitioned to real-world conditions through a friendly sporting event of laser-run, a novel handi-sport, which will involve each subtask.

SAM-Guide will develop action-perception and spatial cognition theories relevant to non-visual interfaces. It will provide guidelines for the efficient representation of spatial interactions to facilitate the emergence of spatial awareness in a task-oriented perspective. Our portable modular transcoding libraries are independent of hardware considerations. The principled experimental platform offered by AR games will be a tool for evaluating both the spatial cognition of VIP and novel strategies for mobility training.

3 Our Work

TODO